Getting Started with LoRAs + Vodka Photorealism

A journey from first attempt to use a LoRA to training a photorealistic Vodka add-on

Hello, FollowFox community!

Today is our first post on the topic of LoRAs. As usual, we will detail the steps of this journey, used tools, parameters, datasets, and models.

tldr: we learn how to get started with LoRAs, from using to training them and in the process we created a photorealistic LoRA for Vodka models. Check out the LoRA here (link)

However, this post slightly differs from our usual in-depth and methodical approach posts. Our team at FollowFox loves LoRAs, and they have been doing a lot of amazing stuff with them. Meanwhile, I have never used a single LoRA all this time, and of course, I have never trained one. FOMO has been real and scary! Looking at the amazing things people can do and close to zero understanding of the space made that gap look very unapproachable.

So in this post, we will discuss how to approach the mission of catching up with things in the crazy, fast-moving world of generative AI.

Let’s set expectations on this post. These are the things that we will cover:

First time downloading and using LoRA’s from other authors

Training the first-ever LoRA following other people's guides

Training our high-quality, photorealistic LoRA.

Things that are NOT in this post:

Deep dive into how LoRAs work

Proper, detailed installation guide of LoRA training tools

A methodical and thorough experimental approach to finding optimal training parameters.

Recap on Vodka Series

Here is a quick recap of where we are for those just joining the general-purpose Stable Diffusion model series.

We have trained three versions of the general-purpose model called Vodka, which is distilled using synthetic data from MidJourney. Check Part 1, Part 2, and Part 3.

And we have a whole roadmap planned:

Upcoming Roadmap

Vodka Series:

Vodka V3 (complete) - adding tags to captions to see their impact

Vodka V4 (in progress) - addressing the ‘frying’ issue by decoupling UNET and Text Encoder training parameters

Vodka V5 (data preparation stage) - training with a new improved dataset and all prior learnings

Vodka V6 (TBD) - re-captioning the whole data to see the impact of using AI-generated captions vs. original user prompts

Vodka V7+, for now, is a parking lot for a bunch of ideas, from segmenting datasets and adjusting parameters accordingly to fine-tuning VAE, adding specific additional data based on model weaknesses, and so on.

Cocktail Series:

These models will be our mixes based on Vodka (or other future base models).

Bloody Mary V1 (complete, unreleased) - Our first mix is based on Vodka V2. Stay tuned for this: Vodka V2 evolved from generating good images with the proper effort to a model where most generations are very high quality. The model is quite flexible and interesting.

Bloody Mary V2+ (planned): nothing concrete for now except for ideas based on what we learned from V1 and improvements in Vodka base models.

Other cocktails (TBD) - we have plans and ideas to prepare other cocktails, but nothing is worth sharing for now.

LORAs, Textual Inversions, and other add-ons:

We have started a few explorations on add-on type releases to boost the capabilities of our Vodka and Cocktail series, so stay tuned for them.

The Plan

As usual, we start with a plan. The goal was to get up to speed on LoRAs as quickly as possible. Our preferred approach is practical and hands-on, with the idea that we will go deep if we like the process and the results. So this was a rough outline:

Download and try a LoRA from somewhere, use it, and see what happens.

Fine-tune the first LoRA, find the easiest path to this

Fine-tune our LoRA with a specific goal and see how far/close it is.

Decide what’s next

Based on your feedback on #3, we had a pretty good idea. On Vodka models, we have received feedback that it struggles to do well with LoRAs, and we all know that photorealism is not its strongest side. So the goal is to try and train a LoRA for Vodka V3 that works with it and addresses the realism issue.

Downloading and Using a LoRA

Image Generation Tool

we will be using local Automatic1111 installation. Check our guide on how to set it up on Windows WSL2 (link)

Downloading a LoRA

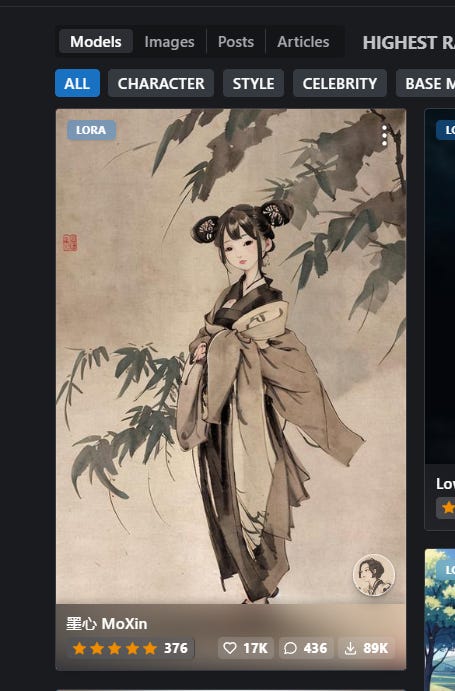

You probably know CivitAI - a website that hosts a very large amount of Stable Diffusion-related models. And it is full of LoRAs. So we sorted the most popular ones and downloaded the first one that looked interesting: MoXin.

Once downloaded (~144MB file), place it under \stable-diffusion-webui\models\Lora folder in Automatic1111 installation.

Now we can launch the Auto1111.

Generating Images with LoRAs

Now all we need to do is to apply specific words related to the LoRA to our image prompt. Automatic1111 has a useful tool to help with this process. Pressing this little button lets you see all your additional models in UI. If you select LoRA, we see the just downloaded model there.

Clicking it adds the syntax needed to use it to your prompt. Additionally, you can press the little information icon and see useful data on how the LoRA was trained.

On top of that LoRA activation syntax, these models usually come with one or more trigger words. For this particular LoRA, we can see the list on CivitAI’s model card.

We selected one randomly (“bonian”), wrote our prompt, and pressed generate.

It all worked like magic! Here is a comparison of the images. Vodka v3 without a LoRA, Vodka with LoRA, and finally, SD1.5 with the same LoRA.

As we can see, LoRA is doing its job. And in fact, sd1.5 images look closer to how the LoRA seemed to look on CivitAi, indicating that maybe, in fact, Vodka is more resistant to them. We won’t do a deep dive here; keep this in mind.

Fine-tuning our first LoRA

Now that we understand how LoRAs apply to the image generation process let’s move to a more interesting part - training our one!

The first goal we had in mind was to figure out a way to train LoRAs as fast as possible and then use that approach to our specific needs.

Almost any Stable Diffusion fine-tuning or training process has three core parts (applies to most AI tasks as well):

Preparing a training dataset

Having a tool or code that will be used to train the model

Choosing the parameters to accomplish your goal.

We started figuring these out by simply googling “train LoRA guide,” we quickly skimmed a few posts. And as we were doing this, we quickly found a gold mine!

On CivitAI, a tutorial from the user called konyconi called “Tutorial: konyconi-style LoRA” (link). What’s special about it is that it comes with the dataset used in the tutorial and a configuration file with the training parameters. This means that our task of training the first LoRA became so much simpler - we had two out of three parts required for the training done. What we were missing is the tool to train the LoRA.

Special thanks to konyconi for sharing all this and making this space accessible to more enthusiasts. Ensure to follow their profile (link); they post an insane amount of high-quality content and trained LoRAs.

So the next step was to find a tool for training and install it.

Installing kohya_ss GUI

After more googling, it was apparent that kohya repositories are the most popular for fine-tuning LoRAs. There are a few versions available, and we decided to use the most popular GUI version (link) as there seems to be a lot of resources about it, and GUI is just a more intuitive way to get started.

The first attempt was to set it up on WSL2, but we faces some dependency issues. Figuring this out is important, but we decided to skip it for now since it can become an insanely time-consuming rabbit hole.

The second and most successful attempt was on Windows. We decided that drive D: will be the installation directory, and with a simple pull command, downloaded the repo:

git clone https://github.com/bmaltais/kohya_ss.git

Next, we made sure all dependencies are met.

And to start the installation, launched the setup.bat file from the downloaded folder. In the dialog window, we selected 1, it launched the installer, and it was all successfully done after a few minutes.

To finish the setup, in the repo folder, we created a few folders as the destination for various files:

inputs - a folder where we will place our datasets

pretrained_models - a folder where we will place starting models (Vodka, sd1.5, etc) that LoRAs will be trained on top of.

trained_models - a destination folder for trained LoRAs.

Launching the first training

We launch the trainer using the gui.bat file. A cmd window will pop up and, after a bit, launch the WebUI on the local server; in our case, the address was http://127.0.0.1:7860

It launched a Gradio interface with a bunch of tabs and options in it. It is a bit overwhelming at the start, but also comforting to notice options and parameters we are familiar with from other training methods. For a start, we navigated to the LoRA tab.

We wanted to use the configuration file from konyconi, so we downloaded it (link) and placed it in D:\kohya_ss\config_files folder. Then selected and loaded it from the interface.

To do so, press the little arrow dropdown saying “Configuration file” and then “Open” to select the config file we downloaded.

The first thing to do is configure the Source model tab by selecting the model on top of which we will be training. We placed Vodka.safetensors (link) checkpoint in “D:\kohya_ss\pretrained_models” folder and then selected it from the GUI.

Next, we move to the “Folders” tab to configure it.

The image folder is where we put our dataset. In this case, we downloaded the dataset from konyconi (link), extracted it, placed it in the folder, and selected it from GUI.

tip: we were getting a weird error here, and turns out that the subfolder in the inputs folder should be named in a specific name where it starts with a number followed by an underscore. So something like “10_boho” or “12_portraits”. update - turns out the value ahead of the underscore determines the number of repeats; that’s why it didn’t train without it.

For the output and logging folder, we just selected the folders we created and finally chose the Model output name to be BohoAI, to follow the naming convention of the author.

The last step is to configure the Training parameters tab. In this case, skimmed it, the parameters more or less made sense, and we hit the Train model button!

Tip: save this config file with your custom name for reuse in the future. Otherwise, you’ll have to keep doing this folder configuration setup repeatedly.

The training launched with no issues, and after about 30 minutes, “D:\kohya_ss\trained_models” folder was full of 15 LoRA models. The last one is the one that has no epoch number on it, so we grabbed the BohoAI.safetensors file and copied it to our Automatic1111 models/Lora folder.

We did minimal testing here, but it all seemed to work. A more proper way of doing this step would be training it on top of SD1.5, then downloading the LoRA from the author (link) and comparing the two outputs to ensure they are the same.

Creating Photorealistic LoRA for Vodka V3

Finally, we got to the important part of making our custom LoRAs. If we go back to our three requirements for doing this (training data, tool, and parameters), we again have two out of three. We have the kohya trainer installed, and we will reuse the parameters. However, we are missing a dataset, and that’s where we will start.

We don’t know what an optimal dataset would be here, how many images, how resolution and aspect ratio impacts training, whether we should aim for a consistent type of images or diverse ones, how captions impact the results, etc. So as not to get stuck, we just decided to mimic an example that worked. That dataset contained ~50 images with super simplistic captions that started with a keyword.

We decided to create a somewhat consistent dataset, and instead of including all kinds of photos, we selected 50 high-quality portrait-type photos. Then, we manually created the caption text files. You can find the whole dataset here (link).

We updated the inputs folder (removed the Boho dataset and added portraits. Updated the model name in the trainer to vodka_portraits and launched the trainer. After ~30 minutes, we had our LoRA ready and placed in Automatic1111 for testing!

Results

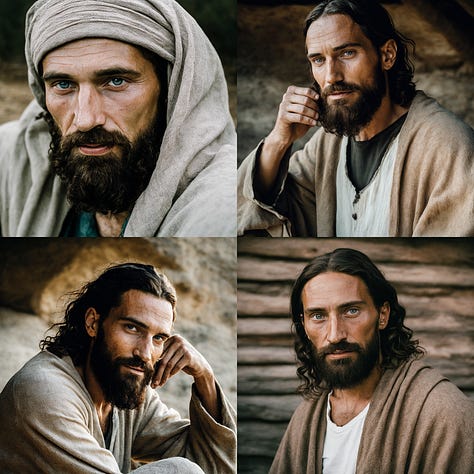

The results were truly surprising for such a low-effort fine-tuning. Now Vodka can generate some very high-quality realistic photos. It’s not perfect and doesn’t work on all generations, but results look amazing when it works. Let’s take a look at a few examples:

On portraits, we saw the improvement, and in many ways, it successfully addressed the issues we had with the Vodka series (realistic photos looking burned or plastic).

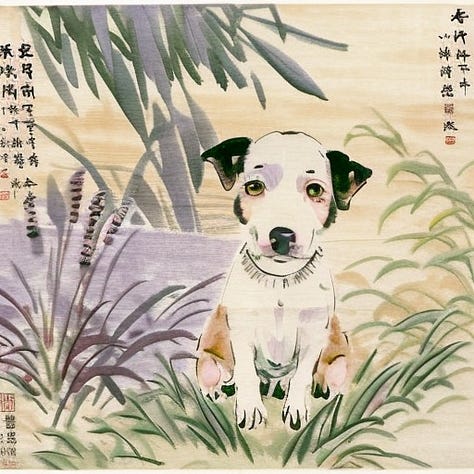

However, it was surprising that Vodka suddenly started generating realistic photos even for non-portrait generations. Remember that our dataset only contained human portraits. It almost felt like this LoRA unlocked the capability of photorealism that was already there from inside the Vodka model.

And this nature shot confirmed its ability to generalize beyond portraits once again.

Overall observations so far:

The model can generate very high-quality photorealistic images

It also introduced many issues; in some generations, fingers are now extra deformed, and we observe some forgetting of subjects they knew before.

The model's diversity went down, generating more similar images within the same prompt.

Homework and Next Steps

Overall it was a very fun journey, and we created a LoRA that is fun and useful.

I hope this post is useful for you to get started with LoRAs and to adapt the approach we used to get up to speed on some topics quickly. And now that we like this space and see it has a lot of potential, it is time to recognize that there is much more to learn and try. That’s why we like to document some next steps and homework that we need to do to start climbing this exciting learning curve:

read the paper about the LoRA method https://arxiv.org/abs/2106.09685

Do a proper WSL2 setup

Practice - train more LoRAs

Start structured experiments to understand what are the things that matter in the process

Keep watching what others are doing and stay up to date.