Riding the Bomb: AI's War on White-Collar Jobs

Thoughts about intellectual labor's imminent devaluation – and a strategy to defend against it

TL;DR: AI is poised to hit white-collar jobs with nuclear speed, and history warns us not to trust easy reassurances. Forget distant AGI; today's AI tech stack is already a game-changer. This sobering essay unpacks the imminent threat, explains why jobs lost may never come back, and offers a strategy (plus 10 practical suggestions) to prepare for the AI storm.

“My opinion is decidedly that the power-loom will never be able to produce the greatest part of the weaving now carried on in the borough of Bolton, in a way that will give encouragement to the employment of capital for that purpose.”

– John Fielden, Esq., MP, March 12 1835.

“So here is the unpleasant truth: AI is coming for your jobs. Heck, it's coming for my job too. This is a wake-up call.

It does not matter if you are a programmer, designer, product manager, data scientist, lawyer, customer support rep, salesperson, or a finance person - AI is coming for you.”

– Micha Kaufman, Founder and CEO of Fiverr, April 08 2025.

Two hundred years ago, the English countryside was in social turmoil.

In the cottage workshops of Bolton, a once-bustling industrial town near Manchester, hand-weavers had been transforming fine cotton yarn into calicoes and muslins for centuries – powered by their muscles alone, foot treadles driving each shuttle through a rhythmic day’s work. Starting in the 1790s, a series of mechanical innovations would put these communities through an impossible ordeal. With the use of waterwheels and, soon after, coal-fired steam engines, a few entrepreneurial shop owners figured out that cloth could be woven using an external energy source, heralding the advent of the so-called “power-loom”.

These shops were suddenly unencumbered by the limited motion that human limbs had long provided. A single factory, minded by an inexpensive attendant, could produce several multiples of the cottage output at perhaps one-third the cost; wages that had stood near twenty shillings a week in 1810 collapsed to barely six by the mid-1830s, even as cloth prices tumbled across Lancashire markets.

As rents and bread prices refused to fall in sympathy, desperation would take varied forms: midnight attacks on mill windows, hunger-driven riots at corn depots, and a barrage of petitions that stacked inches high on the clerks’ desks in Westminster. Local justices, alarmed magistrates, and mill owners alike pressed Parliament to investigate the spreading distress. The result was the House of Commons Select Committee on Hand-Loom Weavers, convened in 1834 and renewed in subsequent sessions, where witnesses described empty looms, pawned furniture, and workshops reduced to silence for want of orders.

Their testimony would frame the policy debate over whether – and how – legislators might temper the harsh arithmetic that powered machinery had imposed on Britain’s most venerable craft.

John Fielden was one such legislator, and co-wrote a long report to Parliament on the plight of the hand-weavers. A brilliant and influential industrialist, Fielden came from a rich family in neighboring Todmorden, whose wealth was also built on the textile trade. Here was a man who thoroughly understood the market, with experience in managing factories and real skin in the game of textile manufacture.

In his summary report to Parliament, Fielden writes the following:

“Of the combination of causes to which the reduction of wages and consequent distress of the Weavers may be attributed, the following appear to be the most prominent:

1st. Increase of machinery propelled by steam.

2d. Heavy and oppressive taxation, occasioned by the [Napoleonic] war.

3d. Increased pressure thereof, from operations on the currency, and contractions of the circulating medium in 1816, 1826 and 1829.

4th. The exportation of British yarn, and foreign competition created thereby, from the increase of rival manufactures abroad.

5th. The impulse given, by low wages and low profits, to longer hours of work.”

– Report from Select Committee on Hand-loom Weavers' Petitions: with the minutes of evidence and index: ordered, by the House of Commons, to be printed, 1 July 1835, page XV

He went on to expand on each of these causes, conducting a brilliant and compelling investigation into the factors underlying them. In the view of Fielden and his colleagues, the principal cause of the workers' plight lay without a doubt in monetary policy – in particular, Britain's return to the gold standard in 1819 after the turmoil caused by the Napoleonic wars.

Based on the investigation, the report presented the following suggestions to Parliament:

establishment of a school of the hand-weaving trade, to improve their expertise and help manage the labor supply by guiding workers away from overcrowded trades;

establishment of local boards of trade to mediate disputes, assist worker mobility and job placement;

changing of labor regulations to make it cheaper to hire indentured apprentices;

implementing exact specifications for manufactured cloth pieces to ensure consistency, and more effectual legal protection against embezzlement by workers;

tax breaks on bare essentials such as malt, hops, sugar, and soap.

It is striking, for a modern reader, how Fielden and his colleagues missed the mark on the root causes of the hand-loom weavers' issues. To their credit, the report did recognize the power-looms as a negative new factor in the labor market, but the experts' stated opinion, as written in the report, was that the ongoing mechanization of labor would always leave room for a (presumably expanding) niche of high-quality artisans working on high-end production.

The report's recommendations, assumed to be constructive actions for the long-term welfare of the workers, were a palliative treatment to a terminally-ill segment of the labor market. While power looms in 1835 may still have had limitations regarding certain fine or complex weaves compared to skilled hand-weavers, the technology relentlessly improved, eventually decimating the hand-loom weaving trade across almost all its segments.

Ironically, John Fielden multiplied his family's fortune thanks to the very same technology he downplayed, with his company, Fielden Brothers, eventually becoming the world's largest single consumer of cotton. In 1803, there were only 2,400 power-looms in the UK; by 1820, there were 14,650; by 1829, 55,500; by 1833, 100,000; and by 1857, over 250,000. This was clearly an exponential process, and its consequences were far more relevant than Fielden and the other experts could realize at the time.
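For readers who want to sanity-check the pace of that adoption, here is a minimal sketch that turns the loom counts quoted above into implied annual growth rates for each interval. The function names are my own, and the only data used are the five figures from the paragraph above; under steady exponential growth the rate would be roughly constant from one interval to the next.

```python
# Power-loom counts quoted in the text: (year, number of looms in the UK).
# The 1857 entry is a lower bound ("over 250,000").
LOOMS = [(1803, 2_400), (1820, 14_650), (1829, 55_500), (1833, 100_000), (1857, 250_000)]


def annual_growth(start, end):
    """Compound annual growth rate implied by two (year, count) points."""
    (year0, count0), (year1, count1) = start, end
    return (count1 / count0) ** (1 / (year1 - year0)) - 1


def growth_by_period(series):
    """Growth rate for each pair of consecutive data points."""
    return [(a[0], b[0], annual_growth(a, b)) for a, b in zip(series, series[1:])]


for first, last, rate in growth_by_period(LOOMS):
    print(f"{first}-{last}: {rate:.1%} per year")
```

Since the 1857 figure is only a lower bound, the rate computed for the last interval is a floor rather than an estimate.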

On modern-day hand-weavers

England in the 1820s was seeing the debasement of physical labor as a source of income. I believe the world in the 2020s is seeing the start of the debasement of intellectual labor.

Whereas power-looms are a prime example of mechanization of blue-collar work, Large Language Models are a prime example of mechanization of white-collar work. In the age of AI, the current era's hand-loom is the personal computer. Its jigs and tools are VS Code, Microsoft Office, Google Docs, QuickBooks, and the like. The yarns and cloth pieces it produces are codebases, strategy slide decks, legal tax memos, government forms, compliance reports, and financial spreadsheets.

With ChatGPT/Claude/Gemini or agents like Manus or Cursor, work that currently occupies an eight-hour day is already achievable in a fraction of that time. This “intellectual work deflation” creates a nasty incentive to just not work: the value of work done today diminishes if tomorrow promises the same output for significantly less effort. It is not clear how motivation can be sustained under such rapid deflationary pressure on the cost of intellectual output.

Software engineers, corporate lawyers, DMV employees, lab technicians, business analysts, middle managers and radiologists are the hand-weavers of our time, under threat from a relentlessly-improving new technology. But if this likening already points to a potentially bleak future, I propose that it may be even worse than it seems.

As my late professor Clay Christensen used to teach, there are, from a macroeconomic standpoint, three main types of innovations: empowering innovations, which transform expensive products into affordable ones; sustaining innovations, which replace older product models with new ones; and efficiency innovations, which reduce or simplify the delivery of something. Empowering innovations and sustaining innovations typically create jobs; efficiency innovations, however, destroy jobs.

The power-loom analogy breaks down chiefly due to the nature of the innovations in each situation. With regard to the power-loom, the mechanization of blue-collar work meant that its outputs became exponentially cheaper and more abundant. The output of a textile factory is clearly valuable and improves/enriches the lives of people obtaining it; over time, improved access to textile goods opened up whole new markets that, in turn, created brand new jobs and value opportunities. As such, the power loom is a textbook instance of an empowering innovation, which, albeit unfortunate for the hand-loom weavers, had hugely net-positive effects for the labor market when all sectors are considered.

AI, on the other hand, may not behave like that. While white-collar work is incredibly diverse, a large contingent of it delivers services that are inelastic by design. You don’t need more than one driver’s license; your company won’t get any richer by binging on compliance audits; your day won’t get much easier if you hire five chiefs of staff. These are clerical roles, typically meant to promote efficiency within an organization’s processes. According to Gemini 2.5 Pro, clerical jobs are the largest major occupational group in the US, employing a whopping 12.2% of the workforce, with other white-collar workers dedicating about a third of their time to work that is clerical in nature.

Mechanization of clerical tasks will increase profits of the companies that do it, but in contrast with mechanizing blue-collar work, there shouldn’t be a lot of new markets created from the reduced price of clerical work. It’s likely that GDP won’t see gains from it; in fact, it may even shrink as more clerical jobs are closed without other employment alternatives being created. Making matters worse, white-collar workers are ultra-specialized – it’s not trivial to, say, retrain displaced DMV managers to have them work as nuclear engineers or cardiologists.

In this light, future historians may deem AI as the most powerful efficiency innovation ever created; and if that proves true, then a lot of people are about to be severely hurt.

Forget AGI – all you need is tool use

The typical AI doomer is afraid of a super-intelligent Skynet AI, when actually the MVP required to upend social order is as simple as an LLM with tool-use access to an email account.

By way of context, “tool use” is a term of art for the ability of an AI to call other programs and make them do things – for instance, if an AI runs a search query on Google, it’s doing so by means of a search tool. But LLMs are only next-token prediction machines; on their own, they don’t access any tools at all. What actually happens is this: in a system prompt (a prompt that’s hidden from the end user), the LLM receives instructions from its creators explaining what tools it can use and when it may be useful to call them (the LLM is also typically fine-tuned to know which tokens it should print to call the appropriate tools). Whenever the LLM is responding to a user message, there’s a supervisor script running on top of the LLM, acting as a middleman between the user and the LLM and being the first to receive the LLM’s output. Should this supervisor script identify an output containing a tool use request (for example: “<tool_search>FollowFox AI</tool_search>”), it then (1) stops the LLM run, (2) sends the contained request to the appropriate tool (in the example, it sends the term “FollowFox AI” for the “search” tool to process), (3) feeds the results of the tool back into the LLM as an additional prompt, and (4) restarts the LLM’s run until it finishes its processing.
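To make the four steps concrete, here is a toy sketch of such a supervisor loop. Everything in it is hypothetical: `fake_llm` is a scripted stand-in rather than a real model, `fake_search` returns a canned string, and the `<tool_NAME>` tag format simply mirrors the example above instead of any vendor's actual protocol.

```python
import re
from typing import Callable, Dict

# Matches tags like <tool_search>query</tool_search> (illustrative format only).
TOOL_CALL = re.compile(r"<tool_(\w+)>(.*?)</tool_\1>", re.DOTALL)


def fake_search(query: str) -> str:
    """Hypothetical stand-in for a real search tool."""
    return f"[3 results found for '{query}']"


# Registry of tools the supervisor knows how to run.
TOOLS: Dict[str, Callable[[str], str]] = {"search": fake_search}


def fake_llm(prompt: str) -> str:
    """Scripted stand-in for an LLM: requests one search, then answers."""
    if "TOOL RESULT:" not in prompt:
        return "<tool_search>FollowFox AI</tool_search>"
    return "Based on the search results, here is a summary."


def supervisor(user_message: str, llm: Callable[[str], str] = fake_llm,
               max_steps: int = 5) -> str:
    """Middleman between user and LLM that executes tool requests."""
    prompt = ("SYSTEM: You may call tools with <tool_NAME>...</tool_NAME>.\n"
              f"USER: {user_message}")
    for _ in range(max_steps):
        output = llm(prompt)                    # the supervisor sees the output first
        match = TOOL_CALL.search(output)
        if match is None:
            return output                       # no tool request: pass it to the user
        name, argument = match.group(1), match.group(2)   # (1) stop and parse
        tool = TOOLS.get(name)
        result = tool(argument) if tool else f"unknown tool '{name}'"  # (2) run tool
        prompt += f"\nASSISTANT: {output}\nTOOL RESULT: {result}"      # (3) feed back
        # (4) the next loop iteration restarts the LLM with the extended prompt
    return "Stopped: too many tool calls."


print(supervisor("Tell me about FollowFox AI"))
```

The `max_steps` cap is a design choice of this sketch: without it, a model that keeps requesting tools would loop forever.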

Say for example you work in a big corporation managing its quarterly budgeting process. Your typical work consists of requesting inputs from P&L managers, aggregating them in some spreadsheet or document, and maybe making some follow-up requests for clarification. You apply some level of critical thinking on the data received, honed by your years of experience and the directives you received from people above your pay grade. Once in a while, you create a report for your direct supervisor on the status and quality of the data.

For you, AGI is already here – it’s Claude with Computer Use and some minimally clever control software. Instruct it on who’s who in the company, give it a list of email accounts to write to, and let it run the whole process for you. Hell, you can even give the AI a human-like persona and some fictitious backstory, and no one will ever notice the budgeting email wasn’t sent by a human being. Sure, such a killer app isn’t out yet, but technically we are already there. Give it a few months and an API wrapper will show up that does exactly that, and boom – your job is redundant. The app will do your job sans the water/bathroom breaks and the poor excuses for procrastination you give your boss.

Consider that, just this week, Google’s team disclosed that Gemini successfully completed Pokémon Blue, reaching the game’s end credits. That is to say, the LLM played the game as a user would, understood its rules, and progressed all the way to the ending. This is not trivial at all, and I’d posit that most of the world’s intellectual work is less complicated than understanding, planning for, and executing on this task.

The truth about AI is that AGI, short for “artificial general intelligence” (as in, an AI capable of understanding or learning any intellectual task that a human being can), doesn’t matter for most practical things. Of course, the power-looms didn’t automate every type of textile product, but eventually newer, specialized systems did. You didn’t need a general-purpose textile manufacturing system to bring about an upheaval in English society. All you needed was a functional application that structurally improved the unit economics of some niche product, and then work from that point on to broaden the application towards other textile products in the value chain.

The first instantiation of this process is happening with code creation. Agents like Cursor, Windsurf, and Microsoft’s GitHub Copilot are the power-looms of software development. From a tech stack standpoint, there is nothing special about them: they are API wrappers for Claude/ChatGPT/Gemini, built on top of VS Code (an open-source code editor), with tool-use access to the repository being edited. But the insane demand for them, and the frothing VC money piled on top of them, demonstrate the thirst for, and value created by, software development mechanization.

The postmoney valuation story of Cursor is particularly revealing: from around $20 million in its seed round in October 2023, to $400 million in a Series A in August 2024, then to $2.6 billion in its Series B in December 2024, and now to an astonishing $9 billion in a Series C announced this week. Windsurf, as it turns out, just got bought by OpenAI for the sweet price of $3 billion, after being valued at $1.25 billion in August 2024’s investment round. (The open-source universe also sees a lot of attention, as evidenced by Cline, an OSS coding agent with over 1.4 million installs worldwide.)

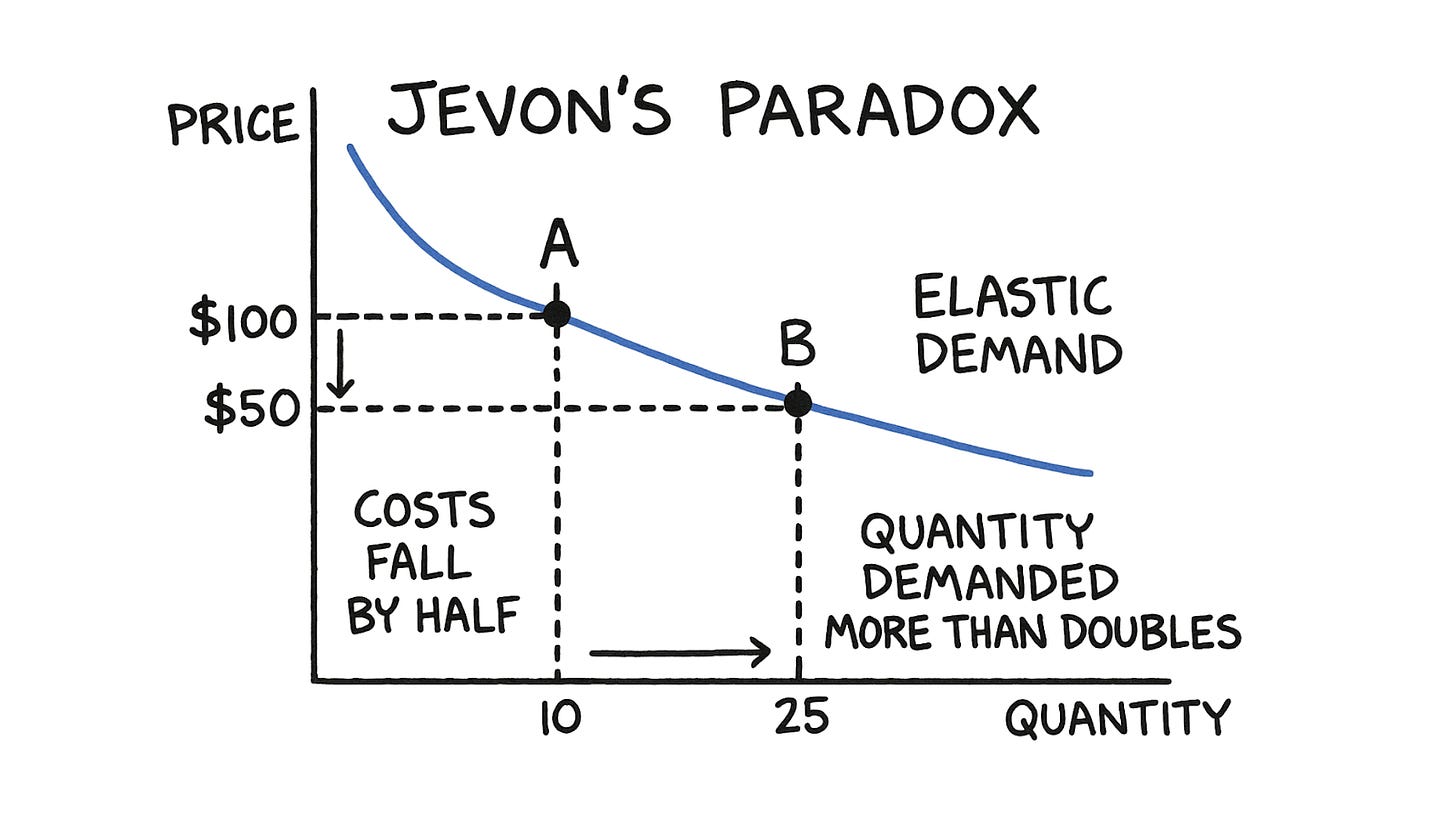

It’s only natural that the first killer apps for agents would involve automating software creation, since it’s the market sector that created them in the first place. The good news is that software development is the principal white-collar market that may see AI as an empowering innovation, to the extent that it redefines what it costs to create and deploy great software, everywhere. It may be an area where the Jevons paradox (Figure 2 below) holds – that is to say, a steep reduction in the price of software may increase software revenues, if the demand for software rises even faster than software prices fall (that is to say, if the demand for software is elastic).
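The elasticity condition can be illustrated with a few lines of arithmetic. The sketch below assumes a constant-elasticity demand curve, which is a textbook simplification and not a claim about how the software market actually behaves; the function name, the `scale` constant, and the elasticity values are all made up for illustration.

```python
def revenue(price: float, elasticity: float, scale: float = 100.0) -> float:
    """Total revenue under an assumed constant-elasticity demand curve.

    Quantity demanded is scale * price ** (-elasticity), so revenue is
    price * quantity = scale * price ** (1 - elasticity).
    """
    quantity = scale * price ** (-elasticity)
    return price * quantity


# Halve the price under elastic (> 1) and inelastic (< 1) demand.
for elasticity in (1.5, 0.5):
    before = revenue(1.0, elasticity)
    after = revenue(0.5, elasticity)
    print(f"elasticity {elasticity}: revenue changes by {after / before - 1:+.0%}")
```

Under these assumptions, halving the price raises revenue by roughly 41% when elasticity is 1.5 and cuts it by roughly 29% when elasticity is 0.5; the paradox only holds on the elastic side of that line.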

But even if the Jevons paradox holds and overall software revenues shoot up, it is not clear what happens with the legions of software hand-weavers made redundant by these superpowered autocomplete machines. These people work for companies with specific mandates and goals to fulfill, and there may be such a thing as a “good enough” software toolkit that doesn’t require much more than what it already does. Moreover, AI will continue improving, and as new markets for software are created, it’s reasonable to assume that newer, more capable AI systems could outcompete the displaced professionals for the jobs those markets could eventually create. For all we know, we might be living in a world where even software development (as a labor category) is doomed, with the sector playing the part of the canary in a coal mine.

The English hand-weavers’ plight was immune to upskilling the laborers; it wasn’t resolved by finding hand-weaving jobs elsewhere; it ran its course and impoverished hundreds of thousands. Indeed, together with other mechanization innovations like automatic threshing machines and mechanical reapers, the labor market wipe-out that mechanization of physical labor generated was “solved” by starvation and mass emigration.

What would be the equivalent solution in the modern world, once intellectual labor is mechanized? Are we to send our tired, our poor, our huddled white-collared masses to Mars? Is that how we become multiplanetary after all?

The case of Fiverr

Last month, Micha Kaufman, founder and CEO of Fiverr, wrote an email to his employees that might well become a historical document. I emphatically recommend that you read his message.

In it, Kaufman declares: “AI is coming for your jobs. Heck, it's coming for my job too. This is a wake-up call. It does not matter if you are a programmer, designer, product manager, data scientist, lawyer, customer support rep, salesperson, or a finance person – AI is coming for you. (...) If you do not become an exceptional talent at what you do, a master, you will face the need for a career change in a matter of months. I am not trying to scare you. I am not talking about your job at Fiverr. I am talking about your ability to stay in your profession in the industry.”

This guy owns a billion-dollar, publicly-listed, multinational online marketplace for freelance services – a company he's been running for the last 15 years, and that, according to Gemini 2.5 Pro, commands over 7% of the global freelance platform market. In this brave new world, freelancers are to Blockbuster what AI is to Netflix. Kaufman really has skin in the game; if he says something like this, we’d do well to listen.

You might already see where I’m going with this: if white-collar workers are today’s hand-weavers, then Micha Kaufman is a prime candidate for a modern-day John Fielden. I see three reasons why this is so.

First, the main recommendations from both men are the same: workforce, upskill yourself!

Fielden believed that upskilling the hand-weavers in new schools of art and facilitating worker mobility across different factories was key to securing their occupation and economic position. Likewise, Kaufman emphatically states that his workforce needs to learn to use AI, by any means possible. If the scenario I’m painting in this essay is right (that is to say: AI, being the mother of all efficiency innovations, will kill white-collar jobs like nothing else ever did), then it’s to be expected that Kaufman’s suggestions to his workforce will be as palliative as Fielden’s were for the hand-weavers.

Second, both Kaufman and Fielden put the key element for individual employee success as high agency.

For Fielden, high agency is implied throughout his report as a necessity for any successful hand-weaver. Kaufman, for his part, explicitly asks his employees to “pitch your ideas proactively” and to “stop waiting for the world or your place of work to hand you opportunities to learn and grow – create those opportunities yourself”. For the impoverished hand-weavers, higher agency (in the form of seeking education, engaging with boards of trade, or even emigrating) meant the difference between starvation and survival. In the case of Kaufman, I believe he left unsaid the true message he wished to convey: most of you will be fired, very soon.

Third, underlying the bleak labor panorama observed by both Fielden and Kaufman, there is a huge value opportunity for their own business.

Both men saw the writing on the wall and leveraged the very engine of their workers’ misery. Fielden installed ever-faster power-looms in his own mills and parlayed the resulting cost curve into what became the world’s largest cotton business. Kaufman’s equivalent move started in February 2025 with the launch of Fiverr Go, a suite of AI tools trained on, in his words, “an unparalleled foundation of data from 6.5 billion interactions between customers and talent.” By distilling those interactions into branded Personal AI Creation Models and a Personal Assistant that “knows the talent and how to best represent them,” Fiverr bottles each freelancer’s craft inside a model and rents it back to clients at cloud speed. Like Fielden, Kaufman is embracing the machine that threatens his workforce, but ensuring it spins profitably inside his own factory.

The unresolved question for Fiverr is whether the Jevons paradox holds for what they sell. The Jevons paradox eventually held in the case of textiles in the 19th century, but as I previously mentioned, there’s no guarantee that it will hold for intellectual work two centuries later. As a market-maker for these professionals that charges a take rate on their revenues, Fiverr loses revenue when the unit cost of intellectual work falls – in contrast to Fielden, who, as the owner of a textiles manufactory, directly benefited from reducing manufacturing costs. For Fiverr to survive and thrive, it needs to grow sales volume faster than the unit cost of freelancing work falls, and only time will tell if that’s an achievable goal.

Regardless of Fiverr’s fate, the fact that the Fielden-Kaufman parallel holds so keenly is yet another harrowing omen for the white-collar job market.

Dr. Strangelove and the AI doomsday device

Let’s come back to the bigger issue. I have made the following assertions so far: (1) AI is the mother of all efficiency innovations and may wipe out whole swathes of white-collar jobs; (2) while all white-collar workers are at some level of risk, clerical jobs are the most vulnerable and likely won’t come back once they are lost; (3) there is no need for AGI for all that – the essential technology to wipe them out is already here, in the form of LLMs with tool use; (4) software development may be the canary in the coal mine and is likely to be affected first; and (5) there’s no way to run from this, and past remedies to labor shocks (e.g. upskilling or mass emigration) should not work.

Assuming these points to be valid, what remains to be discussed is the speed of the change that should be hitting us.

The consensus today is that software engineering will feel this change really fast. For instance, Dario Amodei, CEO of Anthropic, in a talk last March 10th, stated he believes that AI should be writing 90% of the world’s code in 3-6 months (meaning it would happen anywhere between June and September), and then within a year, AI could be writing all code. Microsoft and Meta’s leadership agree with this view – in a conversation at LlamaCon 2025 (which happened just two weeks ago), Satya Nadella stated that 20-30% of Microsoft’s code is already being created by software, and Mark Zuckerberg shared his belief that 50% of software development will be done by AI in the next twelve months. Now bear in mind that these percentages were zero just two years ago. For other segments of white-collar jobs, there’s less evidence available to assess the speed of job destruction; that said, Kaufman seems to believe this is starting within mere months, and there is surely a pathway for that to be the case.

But all commentary made by these thought leaders is tempered with a version of Fielden’s folly: somewhere in their statements they claim that AI cannot automate all work, that true masters in their fields will always be in demand, that a human touch will forever be needed, especially with regard to high-end work. History showed how this argument failed to hold for hand-weavers and for countless other blue-collar occupations in the centuries after that. History doesn't repeat itself, but it often rhymes, and the rhyme here seems clear: even if AI can’t automate all work now, it will relentlessly improve, the number of exclusively human occupations will shrink, and it will do so most likely faster than anyone would dare to publicly guess today.

Peachy scenario. What, then, is a strategy to prepare for the doomsday case that Kaufman is right, jobs evaporate everywhere, and it happens faster than anyone says it would?

We listen to Dr. Strangelove’s suggestion, and, in the process, we learn to stop worrying and love the bomb.

The movie Dr. Strangelove or: How I Learned to Stop Worrying and Love the Bomb (yes, that is the full name) is Stanley Kubrick’s masterpiece of political satire from 1964. It was released at the height of the Cold War, a little over a year after the Cuban Missile Crisis, and it told the fictional story of a crisis created by a mad US general who launched an unauthorized nuclear attack on the USSR. Woven into the dark, way-over-the-top comedy of the film is the notion that, in the midst of an insurmountable calamity, once people finally stop seeking an impossible solution, they will be motivated by their basest, most selfish interests.

In perhaps the most famous scene of the movie (the one that justifies its lengthy name), the Soviet doomsday device is primed and an American bomber is about to nuke Russia, condemning the whole world to a fiery nuclear holocaust. When all hope is lost, Dr. Strangelove – a disabled Nazi scientist who now works for the US government – proposes the idea of a fallout shelter at the bottom of a mine shaft, whose population would include all leaders present in the US Government’s War Room (all men), as well as young, beautiful women at a ratio of 10 women for every man so that they could repopulate the Earth. Suddenly the mood in the War Room swings, and the Soviet Ambassador comments with a half smile: “I must confess you have an astonishing idea there, Doctor.”

Looking past the surreal immorality of the idea proposed (which was scripted exactly due to its absurdity), a key message of the movie that’s left unsaid is: regardless of whether you’re tempted or disgusted by Dr. Strangelove’s psychopathic idea, the only guaranteed method of survival was to be inside the War Room.

That being the case, then one should take all possible steps to get inside it. That is to say, if the AI doomsday device for jobs is about to be activated, and if one desires to survive the economic apocalypse, one needs to follow whatever steps maximize the chances of being a leader in the field of AI.

Entering the War Room

The obvious method of being admitted into the War Room is to start a successful AI business; but entrepreneurship is not for everyone, and failing is the most likely outcome even for the most well-prepared. AI is the golden opportunity for startups, but this path is naturally closed for 99% of people.

The second most effective way is to become knowledgeable on all aspects of AI: knowing all there is to know about it, following every new development, reading papers, tinkering with it. Fundamentally, you should strive to lead AI adoption inside whatever organization you are currently employed by or involved with. For that, you should dedicate all of your discretionary time to learning about it; free yourself of any work commitment you can and use that free time to learn AI.

It is only through this grim lens – learning AI is a stratagem to be admitted into the War Room – that I can understand the recommendation to upskill. Upskilling will not work for the masses, which should, unfortunately, lose their jobs over time. Learning AI is not going to secure you a continued position in the job market. Instead, learning AI will give you some foresight into when your position should be mechanized, and will open opportunities for serendipity to happen for the few that are equal parts lucky, high agency, and competent.

That’s what I believe Kaufman is truly alluding to when he urges Fiverr’s workforce to do whatever it takes to become proficient in AI. That’s also what I believe underlies the April 7th memo of Tobi Lutke (Founder and CEO of Shopify) to his employees, titled “AI usage is now a baseline expectation”, which among other things compels workers to embed AI in all their work, to learn about it fast, and to demonstrate that they cannot use AI to fulfill a new job function before asking for new hires. We’re seeing similar communications coming out of every tech or tech-adjacent company every week now.

For a lot of people the most difficult part of learning about AI is to figure out how to start doing it in the first place. With that in mind, here’s a list of practical suggestions on how best to do it, based on my own personal experience. While these suggestions assume you are not already within the AI community, it is my hope that some of these may prove useful to AI-savvy individuals as well. Written in no particular order:

It goes without saying that it is an absolute necessity for you to become a user of chat-based AI systems like ChatGPT. You must know and use at least five of these: ChatGPT (from OpenAI), Gemini (from Google), Claude (from Anthropic), Grok (from xAI), and DeepSeek. Subscribe to at least two of these (I’d propose ChatGPT and Gemini) and notice their differences. Check out all the different models they offer. They all have strengths and weaknesses, and by identifying them, you will gain some intuition on how they work over time. Important: run at least one Deep Research with ChatGPT’s o3 model and another with Gemini 2.5 Pro, asking for a report on any challenging topic you currently work professionally. If you haven’t seen Deep Research yet, be prepared – the output quality may blow your mind.

It is helpful to understand how the current AI models work on a conceptual basis. No need to understand the details; just get introduced to the concepts. You must know the difference between inferencing and training, and the different hardware demands, as well as time/computational costs, for each of them. Learn what is a token, an embedding, attention, context length, context window, and model temperature. Understand what model parameter size and number of layers mean. Research what is fine-tuning, and then learn the difference between a base model and an instruction-fine-tuned model. Lean the basic steps for an LLM model like GPT4o is created (tip: it includes pre-training, training, and post-training steps). Understand what an API wrapper means in the context of LLMs. For extra points, learn what is retrieval-augmented generation (RAG), model quantization, vector databases, as well as the difference between a diffusion model and a transformer-based model. To learn all of that, it is enough to just ask ChatGPT to explain these things to you like you were a 5-year-old. (Suggestion: just copy and paste this paragraph on ChatGPT and check the results.)

You must get extremely familiar with all major AI labs. The basic players you absolutely must know and have some minimal opinion on are: OpenAI, Google, Microsoft, Anthropic, Meta, xAI, DeepSeek, Qwen, and Mistral. Whatever they do sets trends, moves the industry forward, and points to what’s next, both in terms of the technical challenges that AI faces and the features and use cases people expect from it. Watch their leaders on YouTube whenever they give an interview. Get to know their stated strategies on AI (each of them is pretty unique).

Switch from searching on Google to using a chat-based AI instead whenever you are first researching something. Google’s AI responses from the search bar don’t count; use the real stuff. Use Perplexity at least once in a while. This is likely the future of search, and it will give you more intuition on what to expect when dealing with AI systems.

In my view, X/Twitter is hands down the best information source for learning about AI. Don’t rely on traditional news outlets for learning, as they will be completely worthless. If you are a complete neophyte on AI, you can start with a random search about AI on X. There are a lot of AI influencers screaming to get your attention; most of them are dumb, uninformed, or flat-out quacks – but any information is useful for a newbie, even if it’s wrong. You will learn to recognize these influencers easily over time. Most of them write X posts with something like “Here's what we’re learning from working with AI-native clients:”, and then proceed to write a thread of posts after that. Start by reading these threads with an open mind, along with people’s reactions to them. See what piques your curiosity and then try searching for it.

By the way, you should absolutely learn how social media algorithms work in general, and how X works in particular. You’ll be able to use this information to your benefit to separate the wheat from the chaff. For learning about AI on X, the algorithm is your friend, so get to know how to influence it into showing you the right things. Ask ChatGPT or Grok how best to steer the algorithm towards your target content.

Reddit is also a good tool for learning, although it is a distant second to X. I find it more useful for broadening my knowledge on particular subjects, and for gauging how my own knowledge stacks up against that of the average Reddit poster. If you are an AI newbie, join the r/ChatGPT community and peruse the posts there. If you’re more advanced on AI, I personally gained a lot of information from the r/LocalLLaMA community (dedicated to local hosting of LLMs) in my early days of experimentation.

It is still very relevant for anyone with an interest in AI to learn how to code; you won’t get past the basics if you can’t get your hands minimally dirty. If you’re completely unfamiliar with coding, just think of it as a foreign language and focus on learning Python. Use ChatGPT for it; just ask it how to begin and it will show you the ropes, trust me on this. Extra credit: try running an open-source LLM entirely locally, on your PC. It’s easier than it seems, and you will gain an enormous amount of knowledge and intuition on how AI systems work if you do so. Extra extra credit: figure out the reasons that could lead an organization to run an LLM locally instead of relying on APIs from OpenAI/Google/Anthropic/etc.

Try out the agents that are shaking up the software development market. Install Cursor and see how it works; watch an introductory YouTube video about it if you feel lost in the beginning. Ask it to create a simple app for you and see it working. Notice the fundamental difference between using it and using ChatGPT (which is just this: Cursor directly changes your files, whereas ChatGPT doesn’t).

Find yourself advisors who can help you. By that, I mean people who truly know AI and are always up to date with the newest developments. Try to find several of them, not just one. Ask them what process they follow to keep learning about AI. Ask what you should be reading this week. Do this regularly; set up a weekly cadence if possible. Grab a beer with them once in a while. If you don’t know any such people, hire them, at great cost if necessary.

All of these suggestions can be summarized as: increase your contact surface with AI. Have skin in the game so that you can gain from AI. Convince yourself that your financial security is at risk, and that the way to reduce this risk is to learn about AI deeply.

In conclusion

Let's be clear: the roll-out of tectonic technological shifts often casts long shadows. These processes inherently produce losers, sometimes on a vast scale, potentially redrawing the socio-economic landscape and impacting swathes of the population. This was as true of the power-loom in the 1800s as it will be of AI today. A key difference between the AI tech cycle and most others is that it threatens a social segment never before deemed to be at risk from new technology: the well-educated middle class.

It is important to recognize that I’m only dealing here with the first-order consequences of the current state of AI, not their derivative effects; but given the explosive implications of AI, the socio-economic reactions to these are going to be at least as important as the first-order consequences. At a minimum, new regulations will be put in place to hold this process back or ameliorate its results. Human-exclusive compliance tasks, either self-imposed by AI industry groups or mandated by governments, may be created in fields such as AI safety, AI ethics & oversight, and/or AI quality control. Reductions in white-collar employment levels should also strain the blue-collar labor market as job opportunities dry up and white-collar workers begin competing for lower-education jobs. Social security schemes will be reformulated, tax revenues will change, and geopolitics will evolve, most likely in tortuous and counter-intuitive ways.

Reflexively, external factors are also unfolding independently and will affect the AI roll-out and its consequences. A war over Taiwan may upend the supply chain required to deploy AI to its fullest extent; energy supply may not be enough to make AI cheap enough everywhere; AI may create value by filling the shoes of aging/retiring populations in places like Europe and Southeast Asia; any number of technological breakthroughs may come about that completely change this panorama.

The future is path-dependent and thus unknowable by design. Things can always unfold in different, more positive ways, and I surely hope they do. But hope is not a strategy. This text exists as an invitation to design one for yourself, bearing in mind the likely consequences of the world-changing innovations of our era. The most prudent course of action is to prepare yourself for the brutal reality that there will likely be blood in labor markets and, while success is in no way guaranteed, the best way to prepare for it is by becoming a leader in AI yourself.

Whether you learn about it or not, AI will affect your life and the lives of people around you. Best of luck to all of us!

I've been using your Vodka model for Stable Diffusion for the last two years, on and off. I like it. I have trained a lot of Stable Diffusion models myself - small ones, Hypernetworks and Embeddings.

To your point about teaching people about AI, I think having people gather, clean, and label 20-50 images, and then having them create their own hypernetworks, LoRAs, and embeddings is a great teaching method. It's really fun to make your own model, and then try to create things with it in Stable Diffusion. Then, you can go back and forth and ask, "why is my model only spitting out these types of images, why can't it do this, how is it doing this?" It's fun and pretty educational to understand how these things work.

I've used Claude a good bit, too. It's really amazing technology. But when you use it a good bit, and compare it to the small SD models I've made, it's pretty easy to see how narrow the LLMs, and the smaller models, can be sometimes.