Synthetic Data Trains Stable Diffusion Faster?!

Testing the hypothesis, possible reasons, and experimentation

Last week, while working with synthetic data (see the post) to fine-tune Stable Diffusion models, we noticed something interesting. The fine-tuned models felt heavily overtuned, even though we used our usual training parameters for the number of images when training on a person. Because of that, we were forced to use very low CFG values to get output that didn’t look “cooked”.

The working hypothesis is that Stable Diffusion fine-tunes much faster on images generated by Stable Diffusion than on original images and photos.

So today, we will explore this hypothesis and a few things around it:

Let’s check if indeed SD trains faster on images that are generated by Stable Diffusion.

Try to explain why and come up with some ideas and implications

Check a few approaches to replicate this with “real” photos and think about the implications.

Does Synthetic Data Train The Model Faster?

From last week’s experiments, it indeed felt like models were getting trained faster. But there were too many variables - slightly different training parameters, we used a larger dataset in the case of synthetic models, different captions, different images, etc.

So this time we designed a way more controlled test with the following approach:

We start with 20 captioned images from the internet

We fine-tune a base SD 1.5 model with these images

From the fine-tuned model we generate 20 images that resemble the original dataset and caption them in the exact same way

We fine-tune a fresh, base SD 1.5 model with the newly generated synthetic data

Compare metrics and outputs between the two fine-tuned models to see if there is much difference.

This is what the original dataset looked like:

And this is the synthetic dataset, generated from the fine-tuned model with the goal of getting close to the original dataset.

Not quite the same, and not perfect, but close enough. It’s actually quite interesting to look at the two datasets; there is something unusual about having them right next to each other.

Both models were trained with the exact same parameters and indeed, the output from the second, “synthetic” model seems to be more trained. Here it is for you to compare.

The model trained on the original dataset is clearly undertrained after 80 epochs at a learning rate of 4e-07, while the “synthetic” model seems well trained or even overtrained, even at a CFG value of 1.5.

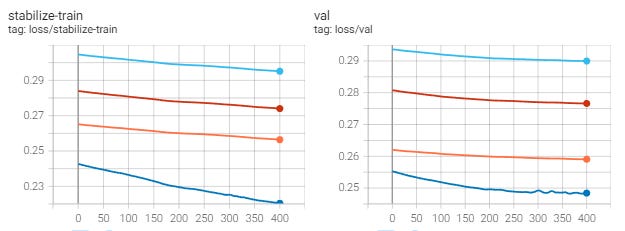

The hypothesis is further supported by the validation logging details of the EveryDream trainer (see more on the methodology of logging). The dataset from Stable Diffusion had lower starting loss values but, more importantly, the slope was much steeper and the difference between the starting and lowest values was larger for both validation and training loss.

As we can see, for both validation and training loss, the drop from the starting value to the lowest value was much greater with the synthetic data: roughly 2.5x greater.
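To make the comparison concrete, here is a minimal sketch of how such a ratio can be computed from logged loss values. The numbers below are made up purely for illustration and are not taken from our runs; `loss_drop` is a hypothetical helper, not part of EveryDream.

```python
def loss_drop(losses):
    """Return the gap between the first logged loss and the lowest logged loss."""
    return losses[0] - min(losses)


# Hypothetical loss curves for illustration only, NOT values from our logs.
original_run = [0.120, 0.118, 0.117, 0.116]
synthetic_run = [0.100, 0.095, 0.092, 0.090]

# How many times larger the drop is for the synthetic run.
ratio = loss_drop(synthetic_run) / loss_drop(original_run)
print(f"synthetic drop is {ratio:.1f}x the original drop")
```

Comparing drops rather than raw loss values matters here because the two datasets start from different loss levels.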

Why Does it Train Faster?

Honestly, I have no idea. All we can do is come up with some explanations that may or may not make sense. If anyone has better ideas please reach out!

Logically it makes sense: those images are generated by a Stable Diffusion model (albeit a fine-tuned one), so there is a lot in each image that the model already knows about. Yes, the subject is new, but everything else, both visible and non-visible, comes from Stable Diffusion.

From here, we had a few ideas. First, these images all come from a latent space, so maybe something about that and the decoding process makes the images easier for Stable Diffusion to learn. The second, less well-articulated idea is that since the whole SD process is about removing noise from a noisy starting point, there is likely some residual noise left in these images, and that makes them easier to fine-tune on. The third, and least articulated one: something else about the whole SD process that we are not able to point to.

Experiments with Latent Space and Noise

At this point, we were both frustrated by our inability to explain exactly what was going on and excited by the possibility of finding a faster way to fine-tune SD models.

So we decided to try two more experiments and see what happens.

Experiment 1: Encoding and Decoding Images

For the first one, we just encoded the original dataset into the latent space and then decoded it back. The captions and training parameters remained exactly the same as for the original run. Please note that this experiment was not perfect: we did not use the exact same pipeline that ED2 uses in its fine-tuning process, so repeating this with higher fidelity might give better results. The code snippets we used to encode and decode are from this video (link).
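The round trip can be sketched roughly as follows. This is not the code from the video, just a minimal illustration under a few assumptions: `vae` is any object with the `encode(...).latent_dist.sample()` and `decode(...).sample` interface that the diffusers `AutoencoderKL` class exposes, and `0.18215` is the latent scaling factor commonly used with SD 1.x. The function name `vae_roundtrip` is our own.

```python
import numpy as np
import torch
from PIL import Image


def vae_roundtrip(image, vae, scale=0.18215):
    """Encode a PIL image into latents with `vae`, then decode it back to a PIL image."""
    # Map 8-bit RGB to a float tensor in [-1, 1] with shape (1, 3, H, W).
    pixels = np.asarray(image.convert("RGB"), dtype=np.float32) / 127.5 - 1.0
    x = torch.from_numpy(pixels).permute(2, 0, 1).unsqueeze(0)

    with torch.no_grad():
        # Assumed interface: encode() returns an object holding a latent distribution.
        latents = vae.encode(x).latent_dist.sample() * scale
        # Undo the scaling before decoding back to pixel space.
        decoded = vae.decode(latents / scale).sample

    # Map [-1, 1] back to 8-bit RGB with shape (H, W, 3).
    out = ((decoded[0].clamp(-1, 1) + 1.0) * 127.5).round().to(torch.uint8)
    return Image.fromarray(out.permute(1, 2, 0).contiguous().cpu().numpy())
```

In practice `vae` would be the VAE that ships with the SD 1.5 checkpoint, loaded for example through `AutoencoderKL.from_pretrained(..., subfolder="vae")`, and the function would be applied to each image in the dataset, with dimensions that the VAE can downsample cleanly, before training.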

Well, we hoped for something special to happen but it didn’t. Both output images and logs show that the model was learning at almost the exact same speed as with the original dataset.

Experiment 2: Adding Noise to The Images

In this case, we started with the original images and added just a tiny bit of noise to them. The code snippet for this part looked something like this:

encoded = pil_to_latent(input_image)

scheduler.set_timesteps(150)

noise = torch.randn_like(encoded)

sampling_step = 148

encoded_and_noised = scheduler.add_noise(encoded, noise, timesteps=torch.tensor([scheduler.timesteps[sampling_step]]))

Everything else in terms of training parameters, etc. was kept the same.
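To get a feel for how little noise sampling step 148 of 150 corresponds to, here is a rough, dependency-free sketch. It rests on several assumptions that may not match the scheduler we actually used: the SD 1.x "scaled linear" beta schedule (1000 training steps, betas from 0.00085 to 0.012), inference timesteps spaced evenly from 999 down to 0, and the variance-preserving form x_t = sqrt(a) * x_0 + sqrt(1 - a) * eps. The helper names are our own.

```python
import math

# Assumed SD 1.x training schedule ("scaled linear"); not read from our scheduler.
NUM_TRAIN_STEPS = 1000
BETA_START, BETA_END = 0.00085, 0.012


def alphas_cumprod():
    """Cumulative product of (1 - beta_t) over the assumed training schedule."""
    lo, hi = math.sqrt(BETA_START), math.sqrt(BETA_END)
    out, running = [], 1.0
    for i in range(NUM_TRAIN_STEPS):
        # Betas are linear in sqrt-space, then squared.
        beta = (lo + (hi - lo) * i / (NUM_TRAIN_STEPS - 1)) ** 2
        running *= 1.0 - beta
        out.append(running)
    return out


def noise_std_at(sampling_step, num_inference_steps=150):
    """Standard deviation of the noise mixed in at a given sampling step.

    Assumes evenly spaced timesteps from 999 down to 0, rounded to integers.
    """
    fraction_left = 1 - sampling_step / (num_inference_steps - 1)
    t = round((NUM_TRAIN_STEPS - 1) * fraction_left)
    return math.sqrt(1.0 - alphas_cumprod()[t])


print(f"step 0:   {noise_std_at(0):.3f}")
print(f"step 148: {noise_std_at(148):.3f}")
```

Under these assumptions, step 0 is almost pure noise, while step 148 adds noise with a standard deviation well under 0.1, which matches the "tiny bit of noise" we were after. Sigma-based schedulers implement `add_noise` as x_0 + sigma * eps instead, but at noise levels this small the two forms are nearly the same.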

And once again we got some disappointing results that are almost identical to the previous experiment.

Conclusions

To conclude the post - there is definitely something interesting here as the model clearly trained faster on the images from SD.

At the very least, we can leverage that information and account for it when tuning with synthetic data, especially if we want to mix non-SD and SD outputs in the dataset. This possibly has implications for the regularization part of the DreamBooth process.

The best case scenario would be to properly understand what’s going on here and potentially use the derived method to pre-process training data before fine-tuning. As we saw a significant increase in the learning speed, this would have some major implications for the whole space.