Vodka V4: Decoupling Text Encoder and UNET Learning Rates

To address the "burnt" look of some Vodka generations, we are experimenting with separate learning rates

Hello FollowFox Community!

TLDR: we attempted to address the “burnt” look of photorealistic generations of Vodka models. We didn’t succeed due to many unexpected changes, but it was a journey full of learning. In the end, we released an updated Vodka model V4.

Check out the model on civitai (link)

While Vodka models (link) have been performing quite well and generating a lot of high-quality outputs, we have been observing one unresolved issue - some of the photorealistic generations looking ‘burnt.’

In this post, we will find the underlying reason, address it and hopefully release a new version of the model with improved performance.

PSA: We are working on a practical introductory course on generative AI. If you like the style of our work and interested in a hands-on, beginner-friendly introduction to the gen-AI space, please express your interest in this waitlist (link).

Diagnostics

Let’s logically connect the facts gathered throughout the experimentation to diagnose the issue.

First of all, this burnt look is not present in the base SD 1.5, meaning that this is something that we introduce with our training process.

We also know that synthetic data trains SD models much faster (link), so it’s unsurprising if the community standard learning rates we have been using are too high.

Interestingly, this frying of our model happens very early in our training, and it doesn’t get much worse as the model trains. This has been consistent throughout our tests (link to v3 test images). Meantime, the model improves a lot with additional epochs on consistency, flexibility, and overall quality. So we can not fix the issue by reducing the number of epochs.

When fine-tuning SD models, we train two models - Text Encoder and UNET. And in our previous experiment (link), we noticed that frying the UNET is much easier when compared to TE. What’s more, the symptoms of fried UNET are quite similar to the issues we observe in Vodka models. At this point, the main hypothesis is that we are burning our UNET early.

To be extra confident, we swapped Text Encoder and UNET (one by one) of a fresh model with the 100 epoch Vodka one and compared the output. And once again, we saw that UNET is the one causing the overtrained visuals.

The Plan and Unexpected Difficulties

Our plan was simple: we decoupled TE and UNET learning rates. We decided to keep Text Encoder at 1.5e-07 and reduce UNET 3x to 5e-8. And based on the results, we could make further adjustments.

We also decided to slightly adjust the tags we added in the last session (link) and moved them from the start of the prompt sentence to the end.

Updates in Training Tools

As we were starting the training process of V4, we decided to update to the latest version of the EveryDream2Trainer. It was quite a large update with many moving pieces: PyTorch was updated to the 2.0 version, bitsandbytes to 38.1, and finally, xformers to 0.20.

And as we launched the training with the usual configs but the updated learning rates - right away, we noticed significant differences from the previous runs. The VRAM usage has decreased quite a bit, and the results after 25 epochs looked significantly more overtrained than before.

Couple this with changes in how the loss was calculated (changed from relative to absolute), and the fact that we didn’t save the branch that we had been using meant that we could not conduct a properly controlled experiment as planned.

This is a good lesson for us in the future to be a bit more careful when conducting such a series of experiments.

Compromised Test

We tried to test a few parameter changes to try and get to the previous training status quo, but we could not find the perfect match. The best solution we found was switching from adamw8bit optimizer to adamw. This meant increased VRAM usage and slower training but resulted in a model resembling our previous tests. Unfortunately, we cannot say that the test is identical; therefore, the overall experiment is somewhat flawed.

Training Process

As discussed, we had a few core updates in the training process, but everything was the same as the previous Vodka models. Here is the summary of the key changes:

Updated EveryDream2Trainer with the new PyTorch 2.0, bitsandbytes 38.1, and xformers 0.20.

Optimizer switched to adamw.

Learning rates decoupled for UNET and Text Encoder and set to Text Encoder at 1.5e-07 and UNET to 5e-8.

Added tags moved from the beginning of the caption text to the end.

As usual, we are uploading all our training parameter files and the training logs on Huggingface (link).

Testing Process

The testing process for the Vodka release process at this point is almost standardized and consists of a few core steps:

Compare the initial four checkpoints (we save at 25 epochs, 100 total) and choose the best one

Compare the chosen checkpoint with the last version of Vodka’s chosen checkpoint to see key differences.

Create the release candidate mixes and choose the best one for the release.

Before we dive into our own takes on these steps, we are uploading all the generated checkpoints and comparison grids in full resolution in case you want to take a look or try to play with different mixes:

Initial 4 checkpoints (link)

Comparison image grids for the initial 4 checkpoints (link)

Comparison of the initial Vodka V4 100 epochs vs V3 75 epochs (link)

Three flavors of Vodka v4 mixes (link)

Comparison images of the three flavor mixes (link)

Comparison of Vodka V4 release version vs the released v3 (link)

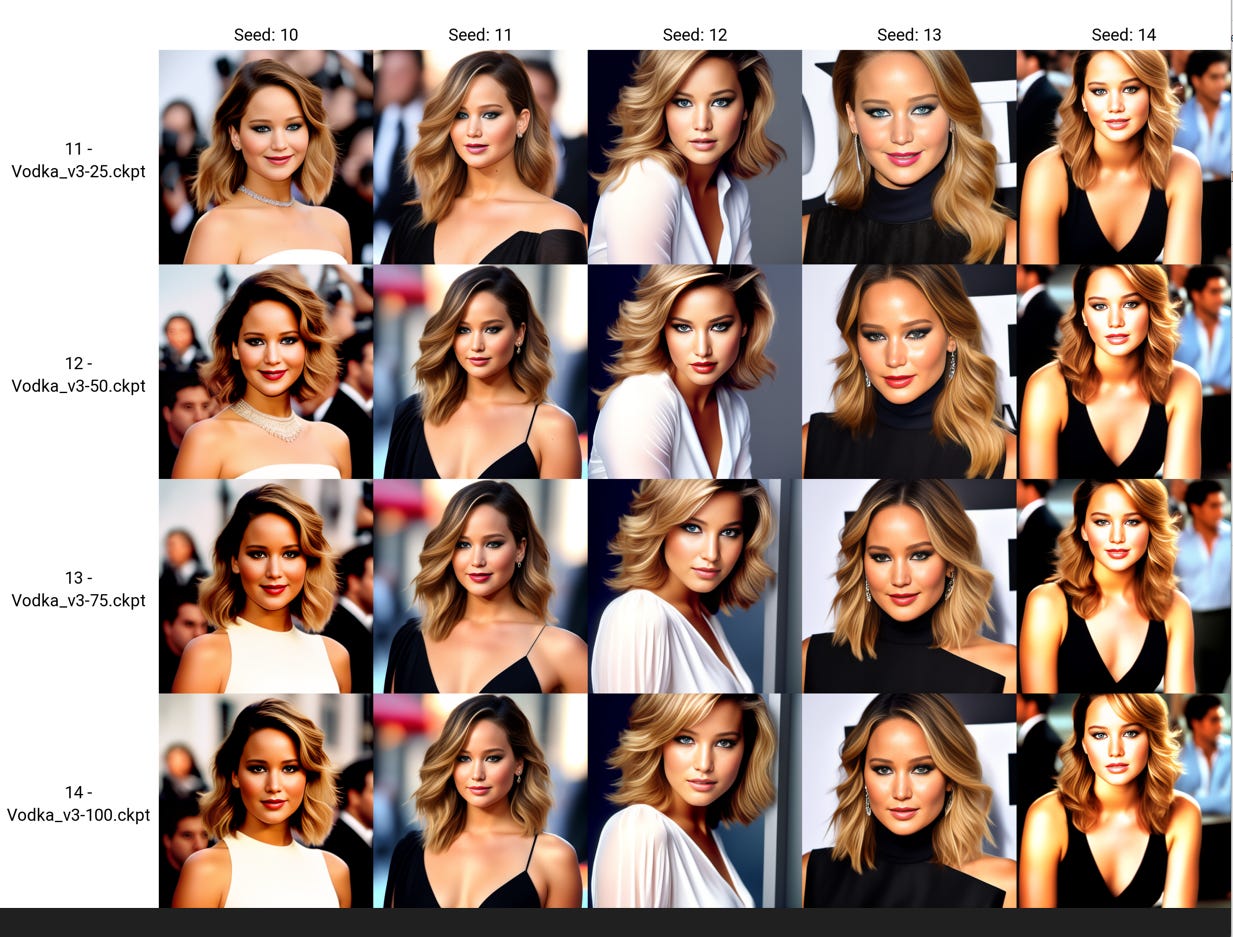

Initial Checkpoints

I don’t think there is much to discuss here except the fact that while all checkpoints looked similar, the 100 epochs one was slightly better almost across all domains. Unfortunately, the traces of our “burnt” problems were still on realistic images, but before cross-comparison, it is hard to say if there was an improvement.

So we chose and proceed with the last checkpoint from 100 epochs.

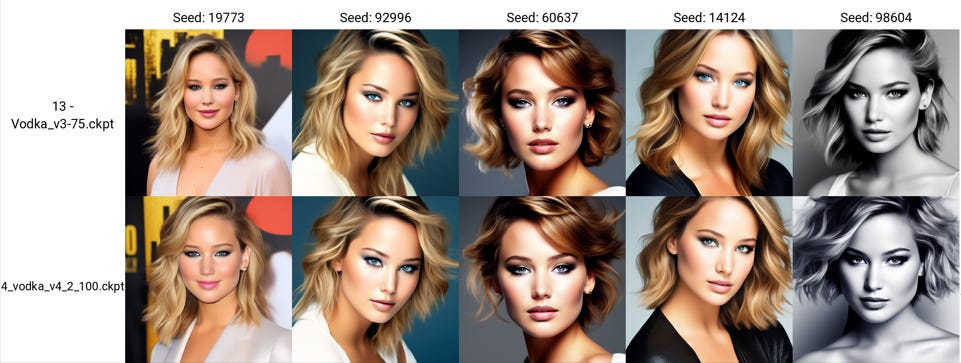

Compare Initial Chosen V4 with the Initial V3

This was the moment of truth to see if we had resolved the issue. Unfortunately, the comparison grid reveals that we did not. The two models look similar, including the realistic generations’ “burnt” look.

We have discussed this before, but it is normal and expected that not all experiments will be successful. Not successful experiments are full of learning, and talking and discussing such journeys is and will be an essential part of the collective advancement of our capabilities.

What’s more, in this particular case, we are not convinced that either the diagnosis or the direction of the solution was incorrect. We seem to be still over-training the UNET early in the fine-tuning process. The combination of all the changes didn’t isolate and address the issue adequately. In the future, we will do further experiments on this - maybe even lower LR for UNET or a slow ramp-up of LR early in training.

At this point, the main question was - do we still release something as a V4?

Mixing and comparing V4 candidates

We knew that V4’s initial checkpoint was not worth a release on its own. But we have a new checkpoint that looks decent but somewhat different from V3. So we returned to our not-popular but useful practice of mixing Vodka models.

V3 and V4 initial checkpoints look quite similar, so we decided not to use V3 in any mixes. However, from the previous post on D-adaptation (link), we have another new checkpoint that looks interesting and different. So we ended up creating three different mixes:

V4 100 epochs mixed with D-adaptation 50 epochs

Same mix as V3 release recipe: V4 initial checkpoint mixed with the difference of V2 and V1.

Kitchen sink: V4 and D-adapt mix combined with v2 and v1 difference.

All three models looked exciting and had their own strengths, but the simplest of the three, V4 mixed with D-adapt, looked the most promising, and we chose it as the release version of V4.

V3 Released vs the Chosen V4 mix

The last comparison and the final go/no-go about the V4 mix was to compare it with the last released Vodka model.

The results of this comparison are mixed. There are individual images where V4 looks better, but also many cases when the opposite is true. Both models have the typical Vodka feel but are still quite different.

In the end, we decided to go ahead and release the V4 and let the audience be the ultimate judge of which one they find more useful and exciting.

Updating Photorealistic LoRA

In one of our previous tests, we trained a LoRA that improved the photorealistic performance of the Vodka V3 (link).

As we decided to release the V4, we re-trained that LoRa with the same setup and got a slightly better performance when using it on V4 than the previous version. So we are releasing it along with the V4 update.

It’s important to say that it's far from perfect after using this LoRA for a while. It seems over-trained, and many faces generated by it look very similar. However, it improves the model’s photorealism and is especially useful when used on non-portrait-type generations (ie, generations that differ from the training data we used).